Google Search Console is a must-have account for owners of blogs and websites. It is critical for SEO because it helps webmasters understand Google’s view of their website or blog. And the great tools inside Google Search Console don’t stop at reporting. Google also provides multiple data points and suggestions for resolving issues you may not have even known existed.

When I first discovered Google Search Console I was thrilled. I was fascinated by the data it provided and it has only gotten better over the years. Google has done a really good job providing a mix of reporting, tools, and education all under one digital product.

Setting Up Your Google Search Console Account

Getting the account set up and running is much easier than most people think. If you already have an existing Google account for Gmail or Google Analytics, you’ll just log into this same account to set up Google Search Console.

If your website or blog is using the popular SEO plugin from Yoast, it’s virtually painless. The free version of Yoast’s SEO plugin will help you easily connect (and verify) your digital property to Google so you can start collecting data in Search Console.

In the next section I’ll be talking about each area of Google Search Console and why a website owner would use it. Before we go too in-depth, I would like to provide some high-level lists of top tasks for using this tool.

Getting Started With Google Search Console

- Add and verify your websites or blog

- Link Google Analytics to Google Search Console

- Add an XML Sitemap

- Validate your robots.txt file

Ongoing Maintenance Items

- Review Crawl Errors

- Validate your XML Sitemap is error free

- Review your Structured Data for errors

- Review suggestions and issues within HTML Improvements

- Review the inbound links to your site

There are many more things we can use Google Search Console for, but those are the must-have steps I recommend to all website owners. Keep these in mind as we explore the features and functions next.

What’s Inside Google Search Console

If you’re like most website owners, you have limited time and resources to dedicate to sleuthing around in Google Search Console. Don’t fret! There is a lot of great information and tools waiting and it won’t take a physics degree to understand the information presented. Let’s review the individual sections of Google Search Console so you understand what each item is and why it can help with your SEO efforts.

The Search Appearance Section

The Search Appearance section will provide information on how your website is displayed in the search engine results pages (also known as SERPs). The SERPs are your first opportunity to reach your audience so it is important that you get this data right.

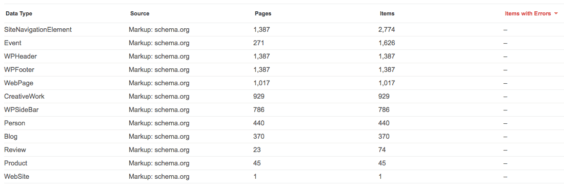

Structured Data – Structured data utilizes Schema.org vocabulary to provide labels for your website data. These labels help the search engines better understand your content, organize it, and display it in search. Structured data could be related to prices and ratings for products or dates and locations for events. Or it could pertain to Knowledge Graph content that appears at the very top of a search results page.

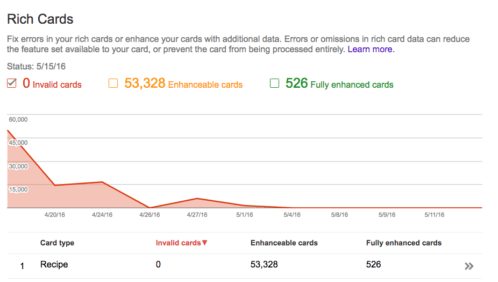
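
To make the idea of labels a little more concrete, here is a minimal sketch of what Schema.org markup for a product could look like when written as JSON-LD. The function names and the sample product are my own placeholders, not something Google Search Console generates, and a plugin will normally produce this markup for you.

```python
import json


def product_jsonld(name, price, currency, rating, review_count):
    """Build a Schema.org Product description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        # Price and currency labels for the product offer.
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        # Rating labels, stored as strings.
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": str(rating),
            "reviewCount": str(review_count),
        },
    }


def as_script_tag(data):
    """Wrap JSON-LD data in the script tag that is placed in a page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # "Example Widget" is a made-up product used only for illustration.
    print(as_script_tag(product_jsonld("Example Widget", 19.99, "USD", 4.5, 12)))
```

Once markup like this is on a page, the Structured Data report is where you would look for any errors Google found in it.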

Rich Cards – A rich card is a more detailed presentation of website data. It is designed to improve the standard search result with a more structured and visual preview of website content. This can be used for products, events, recipes, etc.

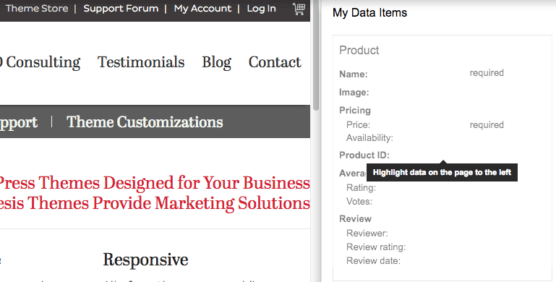

Data Highlighter – Designed to help you fix issues within Structured Data and/or highlight the structured data so Google can better understand it. It is an alternative to using a rich snippet plugin. This can be used for products, events, recipes, etc. If you are using WordPress plugins that produce this data (like Yoast’s WooCommerce plugin) you do not need to use this tool.

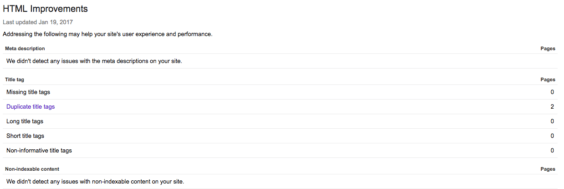

HTML Improvements – This area provides a list of recommended improvements to your meta titles and descriptions. You’ll receive a list of which titles and meta descriptions are too short or too long, as well as any duplicates Google discovered. All of these should be reviewed and addressed.

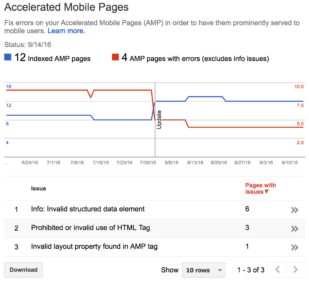
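
If you want to catch these issues before Google reports them, the same checks can be sketched in a few lines of Python. This is only a rough approximation under my own assumptions: the character ranges below are placeholders (Google does not publish exact limits), and the `pages` list stands in for however you collect titles and descriptions from your own site.

```python
from collections import defaultdict

# Assumed character ranges. These are placeholders to tune, not Google's rules.
TITLE_RANGE = (30, 60)
DESCRIPTION_RANGE = (70, 160)
CHECKS = (("title", TITLE_RANGE), ("description", DESCRIPTION_RANGE))


def audit_pages(pages):
    """Return (url, issue) tuples for missing, short, long, or duplicate fields.

    `pages` is a list of dicts with 'url', 'title', and 'description' keys.
    """
    issues = []
    seen = {"title": defaultdict(list), "description": defaultdict(list)}
    for page in pages:
        for field, (low, high) in CHECKS:
            text = (page.get(field) or "").strip()
            if not text:
                issues.append((page["url"], f"missing {field}"))
                continue
            if len(text) < low:
                issues.append((page["url"], f"short {field}"))
            elif len(text) > high:
                issues.append((page["url"], f"long {field}"))
            # Group URLs by identical text so duplicates can be reported.
            seen[field][text.lower()].append(page["url"])
    for field, groups in seen.items():
        for urls in groups.values():
            if len(urls) > 1:
                issues.extend((url, f"duplicate {field}") for url in urls)
    return issues
```

Running `audit_pages` over a handful of pages gives you a simple to-do list that mirrors the categories in the HTML Improvements report.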

Accelerated Mobile Pages – Commonly referred to as AMP, Accelerated Mobile Pages is an open-source project dedicated to speeding up the mobile web. AMP is a way to make your pages more easily accessible on mobile devices and thus faster to load for visitors. For AMP to work correctly you need to create valid AMP pages with the right schema.org markup and make sure they are properly linked with your website. Yoast offers some great articles on setting up and managing AMP pages for WordPress.

The Search Traffic Section

The Search Traffic section of Google Search Console will show information about the activity of your website or blog content. This includes ranking, link data, and penalties.

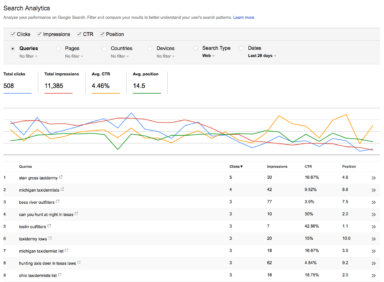

Search Analytics – This is probably the most valuable area in all of Google Search Console because it provides significant data on how your content is being displayed in search engine results pages. This area shows top pages and keywords, as well as ranking and click through rates. It also provides data comparisons so you can watch trends and catch issues quickly. The data can be exported to a spreadsheet so you can further analyze it offline.

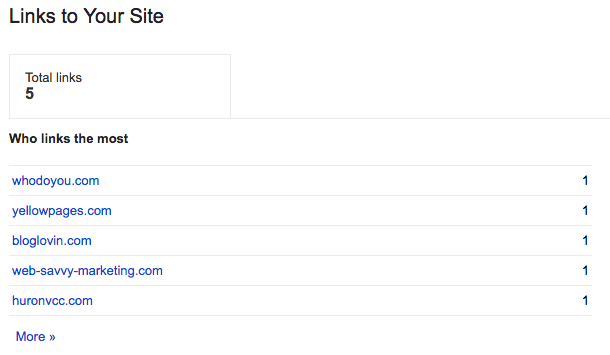
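
As one example of what that offline analysis might look like, here is a small sketch that flags queries with plenty of impressions but a low click-through rate. I am assuming the exported file has `Query`, `Clicks`, and `Impressions` columns, and the thresholds are arbitrary starting points, so adjust both to match your own export.

```python
import csv


def load_export(path):
    """Read an exported CSV file into a list of row dictionaries."""
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def low_ctr_queries(rows, min_impressions=100, max_ctr=0.02):
    """Return (query, impressions, ctr) for high-impression, low-CTR queries.

    Column names and thresholds are assumptions, not fixed by the export.
    Results are sorted with the most-seen queries first.
    """
    flagged = []
    for row in rows:
        clicks = int(row["Clicks"])
        impressions = int(row["Impressions"])
        # Skip queries with too little data to judge (and avoid dividing by zero).
        if impressions == 0 or impressions < min_impressions:
            continue
        ctr = clicks / impressions
        if ctr <= max_ctr:
            flagged.append((row["Query"], impressions, round(ctr, 4)))
    return sorted(flagged, key=lambda item: item[1], reverse=True)
```

Queries that show up in this list are often good candidates for a better title or meta description, since people are seeing the result but not clicking it.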

Links to Your Site – Inbound links have long been a ranking factor and while they do not have the same power they once did, they are still very valuable. This section of Google Search Console will show you links that Google has discovered from third party websites and blogs. These should be reviewed to make sure there are no surprises or links originating from less than desirable websites.

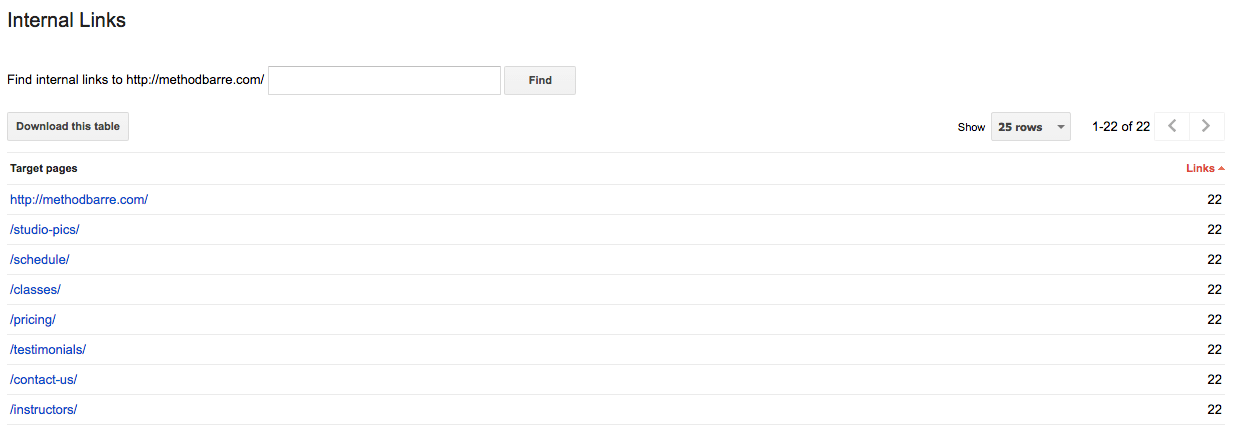

Internal Links – Google provides a list of the most linked content within a website or blog because it uses this data to determine the most important URLs and top content. The more links it sees to a given page or post, the more important it considers this content to be in relation to other content within the website.

Manual Actions – A manual action is a situation where a human reviewer at Google has determined that pages on your website are not compliant with Google’s webmaster quality guidelines. Websites and blogs that have been flagged for issues can be demoted in search or removed entirely from Google’s search results. The Manual Actions area of Google Search Console will provide information on known issues as well as suggestions for addressing the problem. Why might you get a manual action? Top reasons are: hacked sites, user-generated spam, spammy freehosts, spammy structured markup, unnatural links to your site, thin content, cloaking or sneaky redirects, cloaking due to First Click Free violation, unnatural links from your site, cloaked images, hidden text, and keyword stuffing.

International Targeting – This area of Google Search Console can be used to help Google better understand a website’s country of focus. That said, it does not need to be used in many cases. If your website services multiple countries from the same website, you should not populate this data. If your website services a specific geographical area or you’ve hosted your website outside of your country of origin, then it is recommended you use this section to help Google understand where your website should be positioned in search.

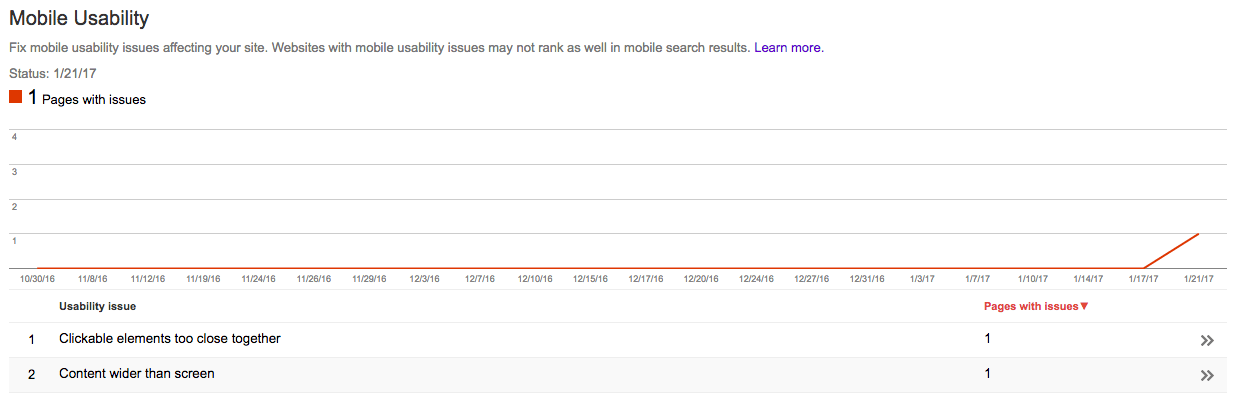

Mobile Usability – Google’s mobile usability report highlights URLs that have usability problems for visitors on mobile devices. The report will identify the usability issue, the URLs where the issue exists, as well as suggestions for correcting the problem. Mobile traffic dominates search and Google is becoming more and more focused on creating a quality user experience on mobile devices. Due to this, webmasters must take special note of this report and proactively work towards resolution of any issues found.

The Google Index Section

The Google Index section of Google Search Console will provide information on how your content is performing in the Google index. An indexed page is one that Google knows about and that is available for display in the SERPs. The index is the collection of those pages or posts available for display in SERPs.

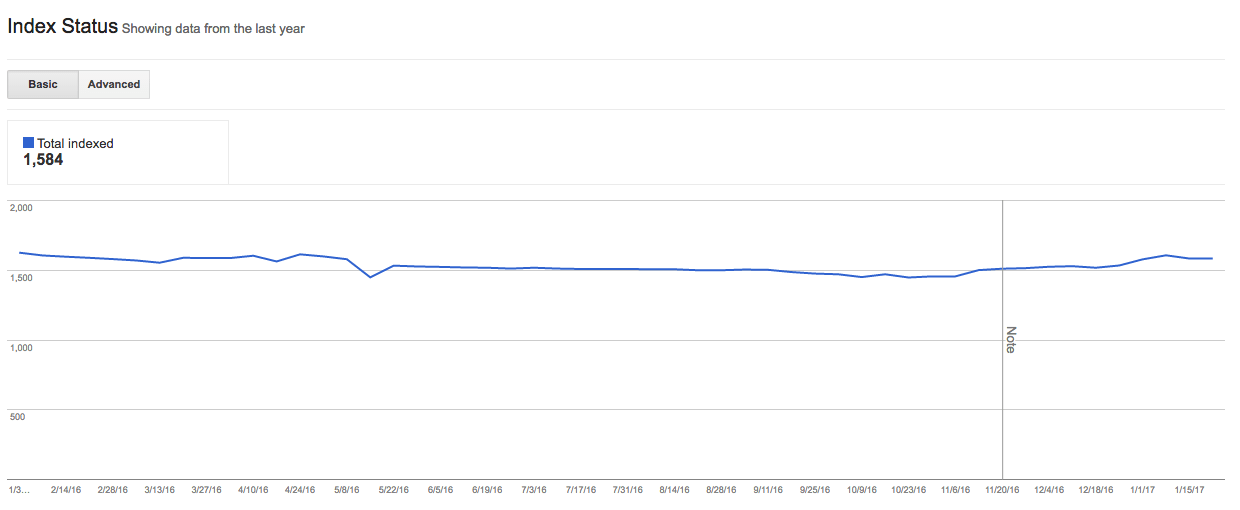

Index Status – The index status report provides overview data on the quantity of indexed content as well as the number of items that are being blocked from indexing. The total amount of indexed content rarely matches the amount of content submitted to search engines and this discrepancy should not be a cause for alarm. Webmasters should use this report to be alerted to sudden drops or sudden large increases in quantity. A major decrease could mean Google is unable to reach content, whereas a sudden increase could indicate your website has been hacked by a third party.

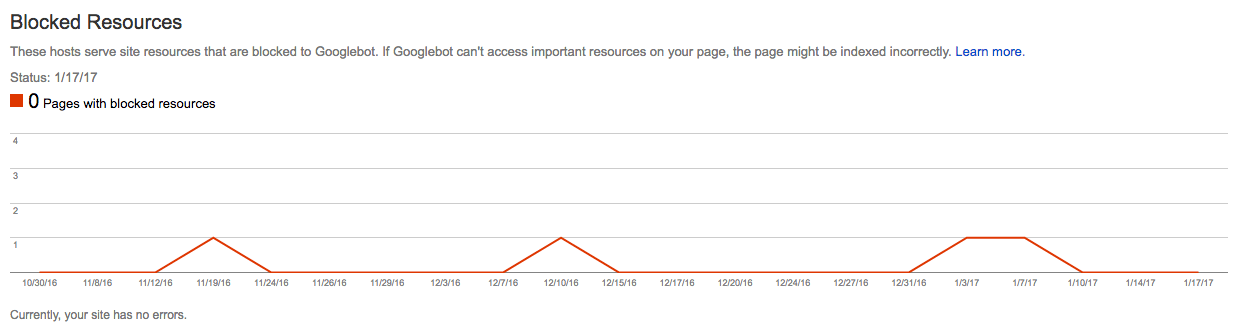

Blocked Resources – The blocked resources report shows website elements that are blocked to Googlebot, which prevents Google from accessing and indexing them. Google does not show all blocked resources in this report, only the resources that it believes are within your control and require review.

Remove URLs – The remove URLs tool allows webmasters to temporarily block pages from Google Search results. A successful request will only last about 90 days, so website owners should use a 404 error to permanently remove URLs from the SERPs. In most cases this tool should not be used. It is designed to accommodate urgent requests only and is not intended for everyday usage.

Crawl

Google uses software known as crawlers to explore and discover website and blog content. The most well-known crawler is called “Googlebot.” Crawlers look at website content and follow links on those pages. They move much like a real person browsing the website. The crawler’s job is to move from link to link and bring data about website content back to Google’s servers for indexing.

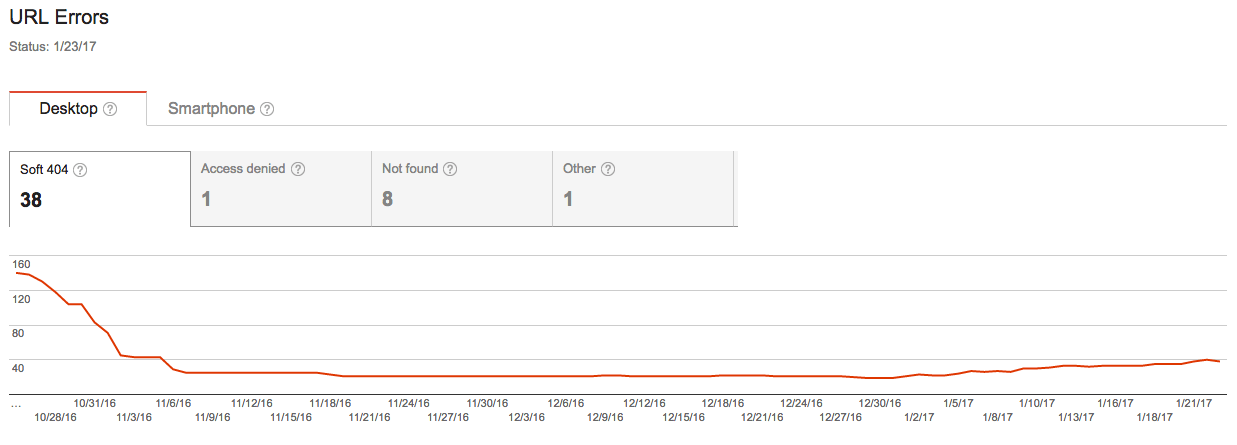

Crawl Errors – This report will show a listing of errors found by status code. These include server errors where Google’s bot timed out, soft 404 errors where the content is not found but a hard 404 is not provided, not found errors, and blocked URLs. Data is broken into desktop views as well as mobile presentations.

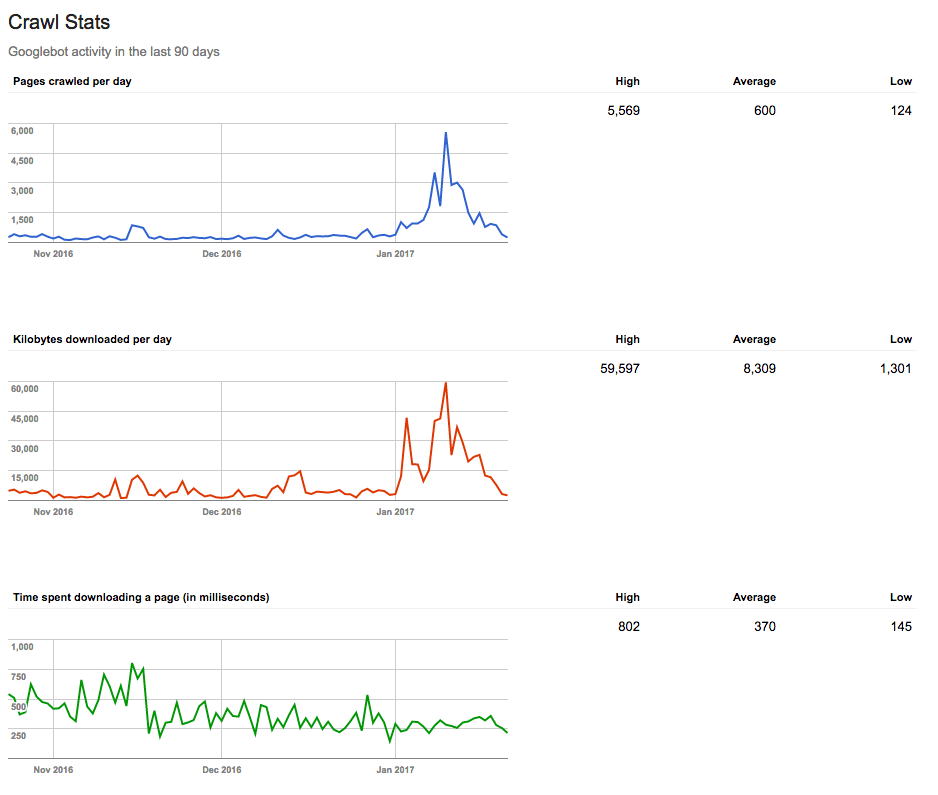

Crawl Stats – This report provides information on Googlebot’s activity within your website for the last 90 days. These data points will list content types and website elements such as CSS, JavaScript, Flash, PDF files, and images. This data is provided so webmasters can be aware of and investigate any sudden shifts up or down in crawl rates.

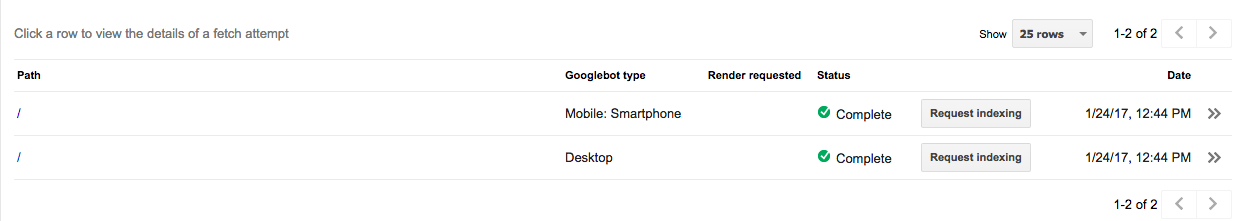

Fetch as Google – The Fetch as Google tool allows webmasters to see how Google sees a URL on their website. Users can run this tool to see whether Googlebot can access a page, see how the page is rendered, and validate that supplemental elements like images or scripts are accessible. Google provides both a desktop and mobile version of this tool.

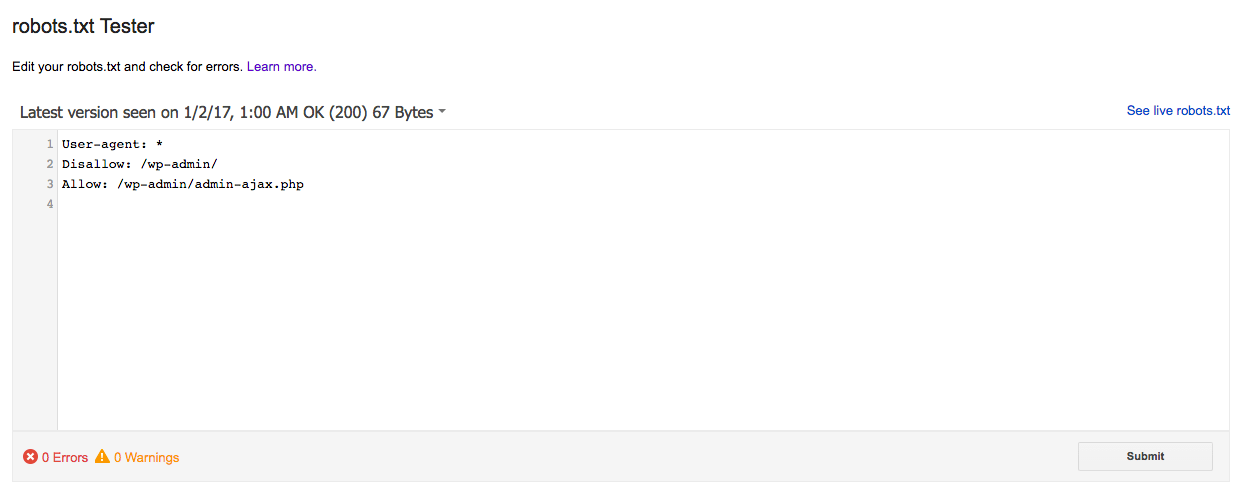

Robots.txt Tester – Robots.txt is a file at the root level (main URL) of a website that provides a list of areas within the website that the search engines may or may not crawl and index. It is very useful for blocking search engines from large sections of your website or server files. It is important to note that this file is providing a request and the search engines may or may not adhere to a website owner’s wishes.

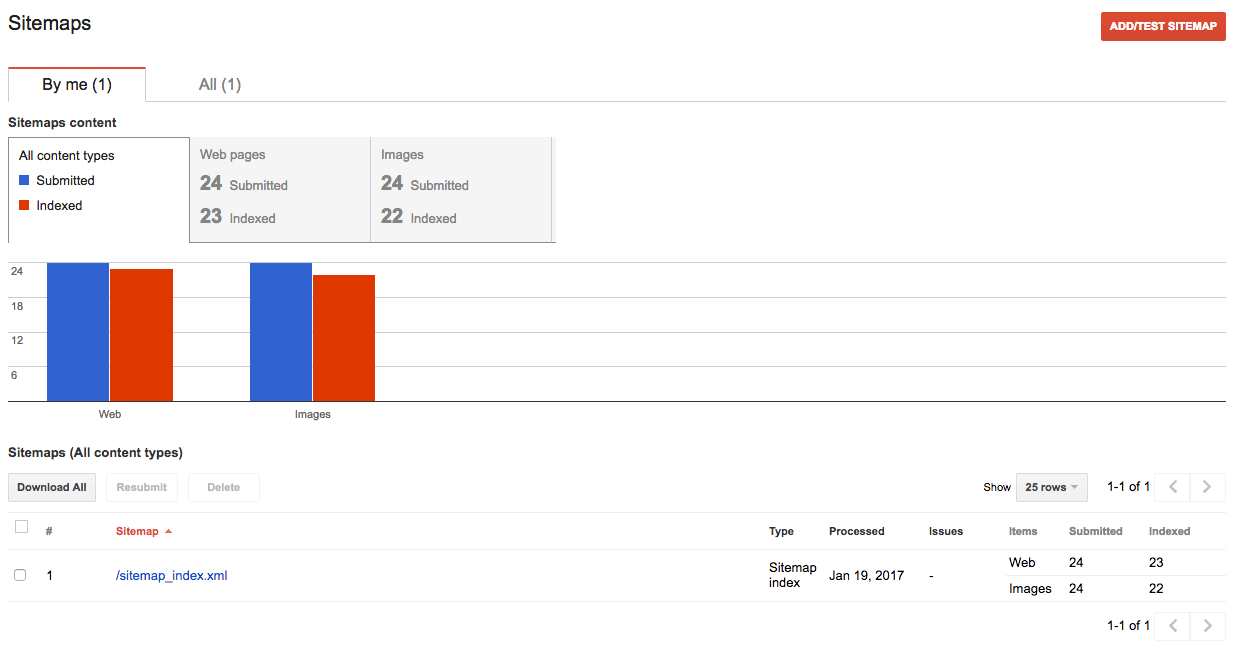
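
You can run a rough version of this check yourself before opening the tester. The sketch below uses Python’s built-in `urllib.robotparser` module against a sample file; the rules and the example.com URLs are made up for illustration. Keep in mind that, as far as I know, this parser applies rules in file order and does not interpret `*` or `$` wildcards inside paths the way Google does, so treat it as an approximation and confirm anything important in the Robots.txt Tester itself.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""


def check_urls(robots_txt, user_agent, urls):
    """Return a {url: allowed} mapping for the given robots.txt content."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines.
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    results = check_urls(
        ROBOTS_TXT,
        "Googlebot",
        [
            "https://example.com/blog/post/",
            "https://example.com/private/report.html",
        ],
    )
    for url, allowed in results.items():
        print(("allowed " if allowed else "blocked ") + url)
```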

Sitemaps – This section of Google Search Console allows users to submit an XML sitemap for Google to use to crawl and index website content. It is not intended for HTML-based sitemaps. XML sitemaps provide a roadmap for search engines to explore and index website content. In larger sites, these guides will distribute content into groups, which allows search engines to better understand the nature of the content. Quality plugins (like Yoast) will automatically update the XML sitemap for any content additions, deletions, or updates.
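
If you are not using a plugin, it can help to see how little is inside one of these files. Here is a minimal sketch that builds a sitemap with Python’s standard library. The function name and sample URLs are my own placeholders, and a real sitemap for a large site would typically be split into several files, which this example does not attempt.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs.

    `last_modified` is expected as a YYYY-MM-DD string.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    # Add the XML declaration by hand so the output is the same everywhere.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(
        urlset, encoding="unicode"
    )


if __name__ == "__main__":
    # Placeholder URLs and dates for illustration only.
    print(
        build_sitemap(
            [
                ("https://example.com/", "2017-01-15"),
                ("https://example.com/blog/", "2017-02-01"),
            ]
        )
    )
```

The resulting file is what you would upload to your website and then submit in the Sitemaps section.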

URL Parameters – This tool can be used to instruct Google to crawl a preferred version of a URL or prevent Google from crawling duplicate content within a website. In most cases, webmasters do not need this tool and they are encouraged not to use it. This is because incorrectly instructing Google on preferred parameters can quickly remove large groups of content from the index and SERPs.

Security Issues

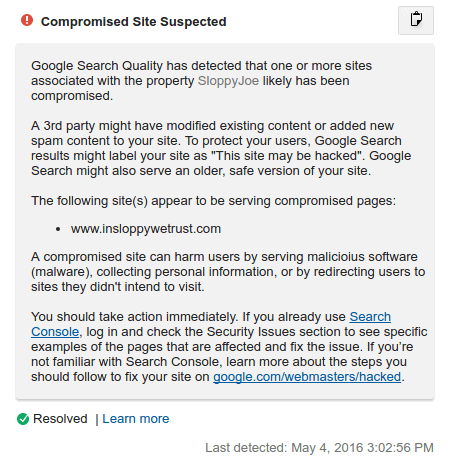

The security section of Google Search Console is used to inform webmasters of security issues Google has discovered. Webmasters can use this section to obtain information about the security issues Google discovered on their website, review the problem via code snippets, and request a second review and reconsideration once the malware or hacking has been resolved. Google will break down the code snippets into sections for malware code injections, SQL injections, or content injections and then provide URLs where these injections were discovered and the date they were found. This data can be very helpful in troubleshooting the malware and taking quick corrective action.

Learn More About Google Search Console

There is so much wonderful information available in Google Search Console and honestly, I’ve only scratched the surface of what it holds. It is hard to go too deep in one blog post as it can quickly become overwhelming.

Below is a webinar walk through of Google Search Console that I performed for my friends over at iThemes. I am doing one SEO webinar per month for them throughout 2017.

If you’d like to learn more about GSC and how you can use it in your SEO efforts, then just visit one of these locations for more information.

- Sign up for Google Search Console

- Google Search Console Help Center

- Watch Google’s introduction video for GSC

- My Online SEO course

- SEO Bootcamp coming this May

The above webinar will take place Wednesday, but recorded versions will be available to all registrants.

Hi Rebecca, GREAT resource – thank you!

Question: I suddenly have a spike in blocked resources but they are all .CSS (coming from a WP site). Went from zero to 10 since December and only thing I did with this website is keep it up to date. I’ve gone ahead and added an ALLOW directive to the robots.txt to allow *.css, with the hopes that it will no longer block these files needed for rendering. However, it appears that one cannot REFRESH the Blocked Resources page – mine still shows the latest status date as almost a week ago. Do you know how to force a refresh in Search Console to see if the line added to the Robots.txt has resolved the Blocked Resources?

The time to clear can depend on a number of elements.

I would clear your cache and use Fetch as Google to try and speed up that process.

You can read more on this subject at https://support.google.com/webmasters/answer/6153277.